Lesson 1.7: The SEO Pyramid

Article by: Matt Polsky

In the previous lesson, we covered the basics of search and briefly touched on the factors that make up ranking. In this lesson, we'll go a step further and give more context around what we do as SEOs.

Where to Start

SEO software company Moz created what they refer to as "Mozlow's Hierarchy of SEO Needs." The graphic does a good job of explaining a typical optimization process.

1. Crawl Accessibility

Crawl accessibility is the first place to start. If Google can't crawl your site, they can't add it to their index.

A few common reasons a site wouldn't be indexable include:

JavaScript

Google has difficulty crawling JavaScript sites that don't serve rendered HTML. JavaScript is more complicated (though not impossible) for Google to crawl, and when a site leaves all of its HTML for the browser's JavaScript to build, it essentially shows Google a blank screen.

The video below is a great example. The St. Louis Browns baseball team site is entirely in JavaScript. When I pull up the Google cached version of the site, it's basically an image with all the text stripped out.
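To see roughly what a crawler gets before any JavaScript runs, you can pull the visible text out of the raw HTML response. The Python below is a rough sketch built on the standard library's `html.parser`, not a model of how Googlebot works (Google does render JavaScript, it just takes more effort). The function names and the two sample pages are hypothetical.

```python
from html.parser import HTMLParser


class VisibleTextExtractor(HTMLParser):
    """Collects text from raw HTML, skipping <script> and <style> contents."""

    SKIP = {"script", "style"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0  # > 0 while inside a skipped element
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep only non-blank text that is not JavaScript or CSS source.
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def visible_text(html):
    """Return the text a non-rendering crawler could read from the raw HTML."""
    parser = VisibleTextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


# Hypothetical client-side-rendered shell: the content only exists after JS runs.
shell = (
    '<!doctype html><html><head><script src="/app.js"></script></head>'
    '<body><div id="root"></div><script>renderApp("root");</script></body></html>'
)
# Hypothetical server-rendered page: the content is already in the HTML.
ssr = "<html><body><h1>Team roster</h1><p>Schedule and results</p></body></html>"

print(repr(visible_text(shell)))
print(repr(visible_text(ssr)))
```

The shell page comes back as an empty string, which is the "blank screen" problem in miniature, while the server-rendered page returns its heading and paragraph text.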

Robots.txt

Most sites have what's known as a robots.txt file. Go to any popular website, add /robots.txt to the end of the domain (e.g., https://missouri.edu/robots.txt) and press enter.

We'll learn more about this later, but the robots.txt file allows you to tell search engines what they shouldn't crawl. It's typically the first place a crawler checks to understand what it should and shouldn't crawl on a site.

Sometimes, developers add the wrong directive, preventing Google from crawling a site.

For example, this happened to AMC Theatres a few years ago, and we caught it for them serendipitously. They added "Disallow: /", which tells search engines not to crawl any page on the site.
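Python's standard library ships a robots.txt parser, `urllib.robotparser`, which makes it easy to test a directive before it goes live. This minimal sketch compares a hypothetical accidental `Disallow: /` with a hypothetical intended rule. Keep in mind the module uses simple prefix matching and doesn't support every extension Google honors, such as wildcards inside paths.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical mistake: blocks every URL for every crawler.
BROKEN = """User-agent: *
Disallow: /
"""

# Hypothetical intended rule: only the checkout flow is blocked.
INTENDED = """User-agent: *
Disallow: /checkout/
"""


def build_parser(robots_txt):
    """Parse robots.txt text that is already in memory (no network request)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


broken = build_parser(BROKEN)
intended = build_parser(INTENDED)

for url in ["https://www.example.com/movies", "https://www.example.com/checkout/cart"]:
    print(url, broken.can_fetch("Googlebot", url), intended.can_fetch("Googlebot", url))
```

To check a live file instead, you could call `set_url("https://missouri.edu/robots.txt")` followed by `read()` rather than `parse()`.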

Meta Robots Directives

Similar to robots.txt, you can add directives in the <head> section of a page telling search crawlers not to index the page or not to follow its links. If noindex is set as a directive, search engines won't add the page to the index.

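We'll learn more about meta robots directives later, but if you want a page out of the index, use a meta robots directive rather than robots.txt. Meta robots is a command, while robots.txt is closer to a suggestion: it only controls crawling, so a blocked URL can still be indexed if other pages link to it. Google also has to be able to crawl a page to see its noindex directive.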
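A noindex directive usually looks like `<meta name="robots" content="noindex">` in the page's `<head>`. As a minimal sketch, the Python below scans HTML for `robots` and `googlebot` meta tags using the standard library's `html.parser`. The class and function names are hypothetical, and the sketch ignores the `X-Robots-Tag` HTTP header, which can carry the same directives.

```python
from html.parser import HTMLParser


class MetaRobotsParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # Attribute names arrive lowercased; values keep their original case.
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in {"robots", "googlebot"}:
            for token in attr.get("content", "").split(","):
                if token.strip():
                    self.directives.add(token.strip().lower())


def is_noindex(html):
    """True if the page asks search engines not to index it."""
    parser = MetaRobotsParser()
    parser.feed(html)
    parser.close()
    # "none" is shorthand for "noindex, nofollow".
    return "noindex" in parser.directives or "none" in parser.directives


print(is_noindex('<head><meta name="robots" content="noindex, follow"></head>'))
print(is_noindex('<head><meta name="robots" content="index, follow"></head>'))
```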

Other Factors in Crawl Accessibility

Outside of indexing issues and optimization, the base of our pyramid dives into the baseline architecture of a site.

- Site URLs

- Crawl budget and efficiency (how easily and how often search engines crawl a site)

- Canonicalization

- Status code issues (are pages broken, returning 404, 500, etc.?)

- The site loads in an appropriate amount of time (Googlebot doesn't time out while crawling)

- XML sitemaps are optimized

- More!
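XML sitemaps follow the sitemaps.org protocol: a `<urlset>` of `<url>` entries, each with a `<loc>` and optional fields such as `<lastmod>`. One common way to keep a sitemap optimized is to list only URLs that return a 200 status and are meant to be indexed. The sketch below assumes hypothetical crawl records with `url`, `status`, `indexable` and `lastmod` keys and builds the file with Python's `xml.etree.ElementTree`.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from hypothetical crawl records.

    Each page is a dict with 'url', 'status', 'indexable' and an optional 'lastmod'.
    Only pages that return 200 and are indexable are listed.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        if page["status"] != 200 or not page["indexable"]:
            continue  # keep broken, redirected and noindexed URLs out of the sitemap
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = page["url"]
        if page.get("lastmod"):
            ET.SubElement(url_el, "lastmod").text = page["lastmod"]
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


pages = [
    {"url": "https://www.example.com/", "status": 200, "indexable": True, "lastmod": "2024-01-15"},
    {"url": "https://www.example.com/old-page", "status": 404, "indexable": True},
    {"url": "https://www.example.com/thank-you", "status": 200, "indexable": False},
]
print(build_sitemap(pages))
```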

2. Compelling Content / Keyword Optimized

I combine these two sections of the pyramid because you typically won't know what content to create without keyword research. Keyword research is at the core of SEO and much of digital marketing. SEOs need to know how to use tools, social platforms, forums, etc., to find what people search for and apply that knowledge to their content.

From the content creation side, Moz says compelling; I say trustworthy. Ideally, you have both, but trust is where to start.

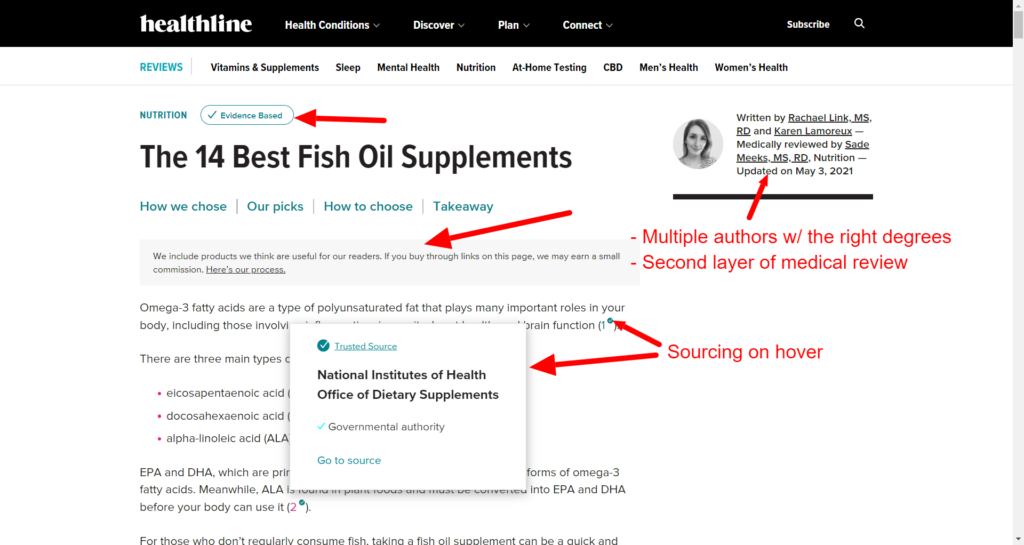

In Panda and the Helpful Content Update, trust is vital. Google wants to know the content you're providing is accurate and from someone who knows the topic. Health and medical are the best niches to review for how other SEOs handle trust. For example, Healthline is doing things like this:

Healthline pulls out all the stops when creating a piece of content. Some of the trust factors Healthline includes in their articles are:

- Authors from the niche

- A review board that reads every article for accuracy

- Sourcing

- Noting the article is evidence-based

- Disclosing any affiliations or ways they make money

The level of trust and sourcing needed varies by niche. Healthline falls in the YMYL (Your Money or Your Life) niche, meaning they have to be buttoned up.

To be compelling in addition to trustworthy, you need to find ways to stick out. It's not easy to do, but some examples are:

- Breaking down a complex subject into an easy-to-digest, actionable piece

- Providing a unique take on a subject (based on trustworthy, verifiable sources and research)

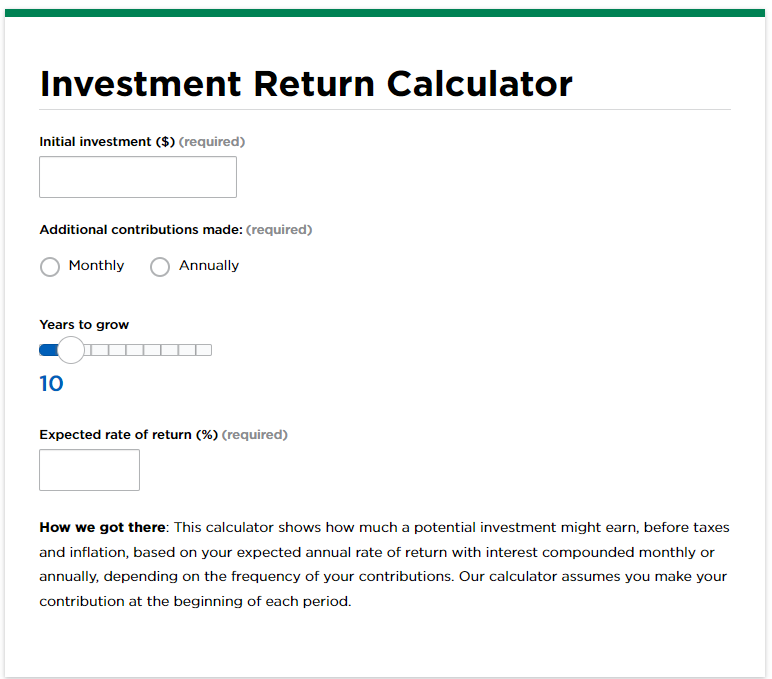

- Creating a tool or calculator that answers a query faster than a 2000-word article

3. Great User-Experience (UX)

UX includes factors that could fall in the first level. Page speed and mobile friendliness are two things that could be baseline accessibility issues. However, they are also UX opportunities.

Other factors to keep in mind for UX are:

- Writing in inverted pyramid style (getting the answer upfront)

- Internal linking to pages in the next step of the user journey

- Ads on the page (Google has algorithms that filter sites with a negative ad experience)

- Readability (shorter, more concise paragraphs)

- Images to illustrate points

4. Shareworthy Content

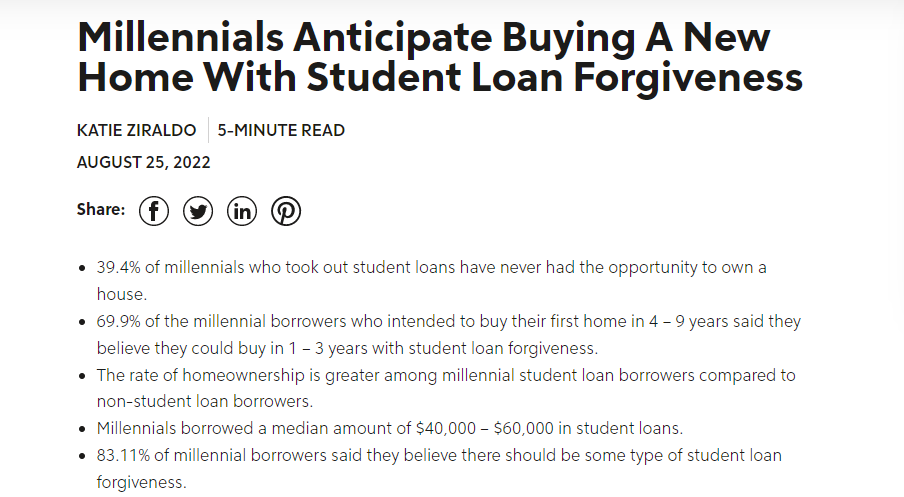

External links still play a significant role in the algorithm – especially when you get into highly competitive niches. Without links, you're not getting the third-party validation needed to climb in the SERPs.

Building links typically means creating shareworthy content or pieces that have a hook that journalists want to write about.

5. Title/URL/Description

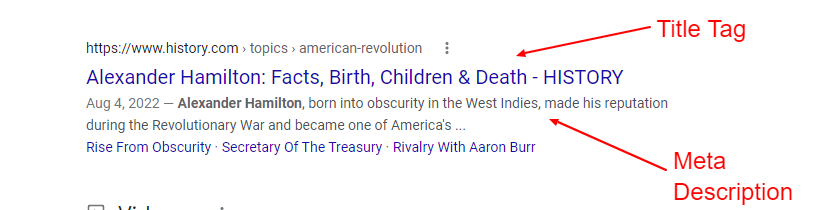

Title tags, URLs and meta descriptions go higher on the list for me, or get combined with crawl accessibility (for URLs) and keyword optimization (for the other two). Building links before making sure these are on point doesn't make sense from an SEO perspective.

We'll learn more about title tags, meta descriptions and URLs in later lessons. For now, know that these affect how clickable a result is and are what users see when performing a search. Also know that Google may rewrite titles and descriptions without your permission if it doesn't like them.
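A quick audit can flag pages with a missing or overly long title tag or meta description. The character limits in this sketch are assumed rules of thumb, not Google limits; Google truncates results by pixel width, so treat the numbers as a rough guide. The parser and function names are hypothetical.

```python
from html.parser import HTMLParser

# Assumed rule-of-thumb limits; Google truncates by pixel width, not characters.
TITLE_MAX_CHARS = 60
DESCRIPTION_MAX_CHARS = 160


class HeadTagParser(HTMLParser):
    """Pulls the <title> text and the meta description out of a page."""

    def __init__(self):
        super().__init__()
        self.title = None
        self.description = None
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
            self.title = ""
        elif tag == "meta":
            attr = {name: (value or "") for name, value in attrs}
            if attr.get("name", "").lower() == "description":
                self.description = attr.get("content", "").strip()

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_head(html):
    """Return a list of human-readable issues (empty if none were found)."""
    parser = HeadTagParser()
    parser.feed(html)
    parser.close()
    title = (parser.title or "").strip()
    description = parser.description or ""
    issues = []
    if not title:
        issues.append("missing title")
    elif len(title) > TITLE_MAX_CHARS:
        issues.append(f"title is {len(title)} chars")
    if not description:
        issues.append("missing meta description")
    elif len(description) > DESCRIPTION_MAX_CHARS:
        issues.append(f"description is {len(description)} chars")
    return issues


print(audit_head("<head><title>" + "x" * 70 + "</title></head>"))
```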

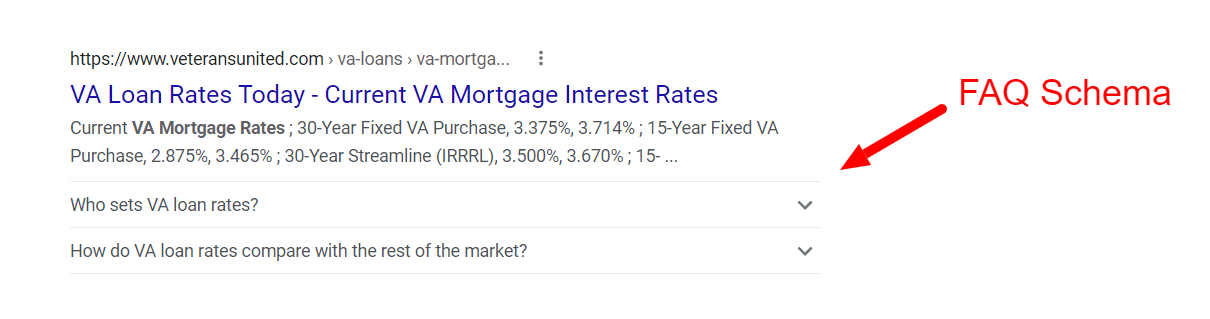

6. Schema/Snippets

The last item on Moz's pyramid is Schema markup. Schema is structured data markup (most often JSON-LD, though Microdata and RDFa in the HTML also work) that you can add to individual pages to help search engines better understand the information on the page. Another benefit of schema is that it can make a page eligible for richer results, such as images, accordions and other features.

I'd typically tackle this sooner because schema markup can help a site take up more room in a SERP, which can lead to higher click-through rates (CTRs).
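JSON-LD is usually the simplest format to add, since it sits in its own `<script type="application/ld+json">` tag rather than being woven into the page's HTML. The sketch below generates a schema.org `Article` block in Python. The function name, headline, author and date are hypothetical placeholders, and which properties Google actually uses for rich results is covered in its structured data documentation.

```python
import json


def article_json_ld(headline, author_name, date_published, image_url=None):
    """Return a JSON-LD <script> tag describing a schema.org Article."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,  # ISO 8601 date
    }
    if image_url:
        data["image"] = [image_url]
    # Escape "</" so a value like "</script>" cannot close the tag early;
    # "<\/" is still valid JSON and decodes back to "</".
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + body + "\n</script>"


print(article_json_ld("Example Article", "Jane Doe", "2024-01-15"))
```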

Now you should have a baseline understanding of how Google works and the processes that make up ranking a page in search results. In lesson 2 we'll learn how to uncover valuable keywords for your SEO strategy.