Lesson 7: Generative AI

Article by: Matt Polsky

Generative AI is a type of artificial intelligence that can generate new content, such as text, images, or music, based on the data it has been trained on. Unlike traditional AI systems, which typically follow hand-written rules or classify and predict within pre-defined parameters, generative AI can create new data similar to its training input.

What is a Large Language Model?

A large language model, or LLM, is a subset of generative AI specifically focused on understanding and generating human-like text. LLMs are trained on vast amounts of text data, allowing them to predict and generate coherent sentences, paragraphs, and even entire documents. The most famous examples include the GPT (Generative Pre-trained Transformer) series, developed by OpenAI, and Google's BERT (Bidirectional Encoder Representations from Transformers), although BERT is designed mainly for understanding text rather than generating it.

How LLMs Work

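LLMs are pre-trained using self-supervised learning, often loosely described as unsupervised learning: the model repeatedly predicts the next word (more precisely, the next token) in a passage, so the text itself supplies the correct answers and no human labeling is needed. By doing this over massive datasets containing billions of words and sentences, they learn language patterns, grammar, context, and even nuances of human communication.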

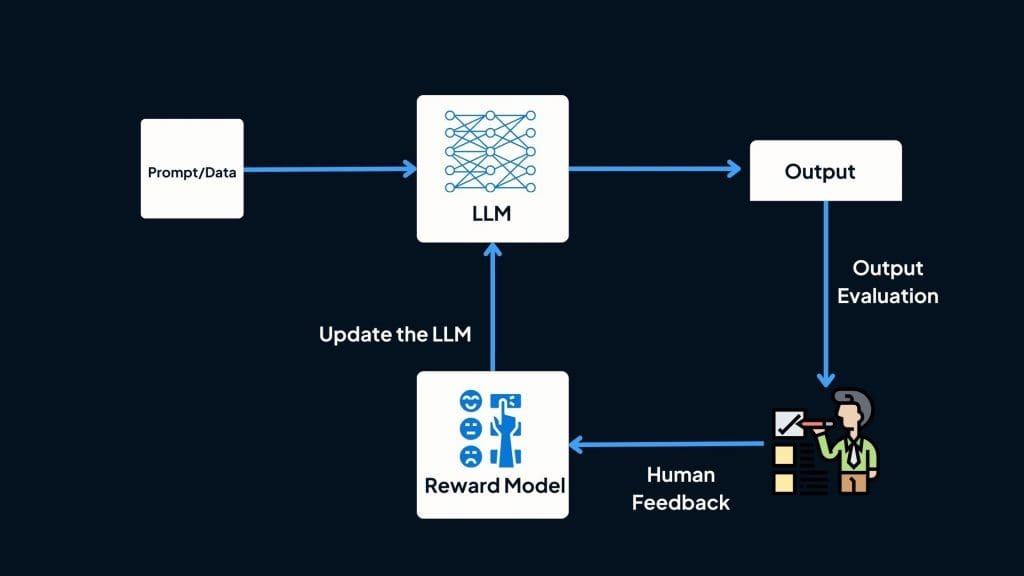
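
As a toy illustration of learning language patterns from raw text, the sketch below counts which word tends to follow which in a tiny corpus and then uses those counts to predict and generate text. It is only a simplified stand-in: real LLMs use neural networks trained on billions of words, not word-pair counts. The corpus and the function names (train_bigram_model, predict_next, generate) are made up for this example.

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus):
    """Count, for each word, how often every other word follows it."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current_word, next_word in zip(words, words[1:]):
            counts[current_word][next_word] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(counts, start, max_words=5):
    """Generate text by repeatedly appending the predicted next word."""
    words = [start.lower()]
    for _ in range(max_words - 1):
        next_word = predict_next(counts, words[-1])
        if next_word is None:
            break
        words.append(next_word)
    return " ".join(words)


# Hypothetical three-sentence "training set" for illustration only.
corpus = [
    "the cat sat on the mat",
    "the cat chased the mouse",
    "the dog sat on the rug",
]
model = train_bigram_model(corpus)

print(predict_next(model, "the"))          # cat
print(generate(model, "sat", max_words=3))  # sat on the
```

No one labeled this data by hand: the "correct answer" for each word is simply the word that comes next in the text, which is the same idea that lets LLMs learn from very large unlabeled datasets.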

After the initial round of training, models go through an alignment process in which human feedback is used to fine-tune and improve the model's performance.

Reinforcement Learning from Human Feedback (RLHF): This is one approach used by companies like OpenAI. The model generates multiple outputs for a given input, and human evaluators rank these outputs from best to worst. The rankings are typically used to train a separate reward model that scores outputs the way the evaluators would, and the language model's parameters are then adjusted to produce outputs that earn higher scores in future interactions.
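
To make the ranking step more concrete, here is a minimal sketch of how a best-to-worst ranking can be turned into a training signal. It assumes a common formulation that is not spelled out above: the ranking is split into (preferred, rejected) pairs, and a scoring model is penalized whenever it scores the rejected output above the preferred one. The function names and the example scores are hypothetical, and the sketch does not reflect any particular company's implementation.

```python
import math
from itertools import combinations


def ranking_to_pairs(ranked_outputs):
    """Turn a best-to-worst ranking into (preferred, rejected) pairs."""
    # combinations() keeps the input order, so the first item of each
    # pair is always ranked higher than the second.
    return list(combinations(ranked_outputs, 2))


def preference_loss(score_preferred, score_rejected):
    """Pairwise loss: -log(sigmoid(score_preferred - score_rejected)).

    The loss is small when the preferred output gets the higher score
    and large when the scoring model disagrees with the human ranking.
    """
    diff = score_preferred - score_rejected
    return math.log(1.0 + math.exp(-diff))


# Hypothetical evaluator ranking of three model outputs, best first.
ranking = ["answer A", "answer B", "answer C"]
pairs = ranking_to_pairs(ranking)
print(pairs)

# Hypothetical scores from a scoring model: agreeing with the human
# ranking gives a lower loss than disagreeing with it.
print(preference_loss(2.0, 0.0) < preference_loss(0.0, 2.0))  # True
```

Minimizing this loss over many ranked examples pushes the scoring model toward the evaluators' preferences; the language model can then be tuned to produce outputs that score well.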