Lesson 1.6: Historic Algorithms to Know

Article by: Matt Polsky

Below, we dive into some of the more notable Google algorithms and how they impacted search. For an extensive list of algorithmic updates, Moz does a good job compiling many historic algorithms here.

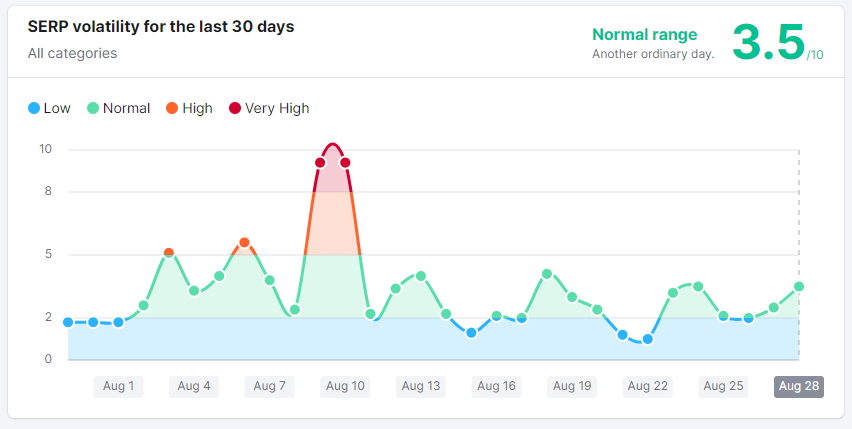

Beyond Moz, some sites attempt to track every noticeable change as it happens by monitoring a large set of keywords and flagging significant ranking movements in those keywords.

These sites include:

Barry Schwartz from Search Engine Roundtable does an amazing job of monitoring all these tools and identifying when fluctuations happen.
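None of these trackers publish their exact formulas, so the following is only a minimal sketch of the general idea, under stated assumptions: it assumes you already have daily rank positions for a set of tracked keywords, scores each day by the average absolute position change, and flags days that exceed a trailing baseline by `k` standard deviations. The function names, the 7-day window and the `k=2.0` cutoff are all hypothetical choices for illustration, not any tool's actual method.

```python
from statistics import mean, stdev

# Hypothetical input shape: ranks[day][keyword] = position in the results (1 = top).

def daily_volatility(prev: dict[str, int], curr: dict[str, int]) -> float:
    """Average absolute position change across keywords tracked on both days."""
    shared = prev.keys() & curr.keys()
    if not shared:
        return 0.0
    return mean(abs(curr[k] - prev[k]) for k in shared)


def flag_fluctuations(ranks: list[dict[str, int]], window: int = 7, k: float = 2.0) -> list[int]:
    """Return day indices whose volatility beats the trailing mean by k standard deviations.

    Assumes window >= 2 (stdev needs at least two baseline scores).
    """
    # scores[j] measures the move from day j to day j + 1.
    scores = [daily_volatility(ranks[i - 1], ranks[i]) for i in range(1, len(ranks))]
    flagged = []
    for i in range(window, len(scores)):
        baseline = scores[i - window:i]
        threshold = mean(baseline) + k * stdev(baseline)
        if scores[i] > threshold:
            flagged.append(i + 1)  # report the day the big move landed on
    return flagged


# Usage: nine quiet days, then a sudden shake-up on day 9.
quiet = {"fha loan requirements": 3, "va loan": 5, "student loan eligibility": 8}
days = [dict(quiet) for _ in range(9)]
days.append({"fha loan requirements": 14, "va loan": 1, "student loan eligibility": 20})
print(flag_fluctuations(days))
```

A trailing baseline is used so that keywords which are always noisy don't trigger alerts on their own; only moves that are unusual relative to recent history get flagged.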

Caffeine and Hummingbird

In 2010, Google rolled out "Caffeine." Caffeine essentially allowed Google to crawl, index and rank a piece of content closer to real time. Before Caffeine, results could take weeks or months to refresh, so fresh or timely content often didn't appear until it was too late.

Caffeine was a complete overhaul of Google's indexing infrastructure. Before Caffeine, Google indexed pages in layers, and results didn't update until every layer had refreshed, which could take weeks to months.

Caffeine let Google index those layers in parallel instead of waiting for each one to refresh in turn before displaying results in the SERPs.

Hummingbird is a separate algorithm that launched in 2013. It shared a key trait with Caffeine: it was a complete rewrite of the algorithm, meant to make search more "precise and fast."

However, the two also had major differences. With Hummingbird, Google aimed to better understand the meaning behind a search. For example, if you searched "Where can I get a VA loan?", the previous algorithm would focus on keyword matching and show results for "how to get a VA loan." After Hummingbird, that query began to return content about how to find a lender, along with local packs.

Panda

Panda hit in February 2011. It targeted article spinning, thin content and duplicate content, and it was massive, affecting nearly 12% of search queries.

Article spinning is rewriting the same article or concept ever so slightly to make it appear unique.

There are two primary reasons SEOs would spin articles. First, before Google was good at understanding intent, you could spin the same article under a different title to rank for variations of the primary keyword.

For example, you could spin an article about student loan requirements and have pages for "Student Loan Eligibility," "Student Loan Qualifications" and "Student Loan Requirements." Each page serves the same intent, but its title targets a specific variation of that keyword.

The second reason is off-site SEO and link building. SEOs would (and still do) rewrite the same article and pitch each version as a "unique" guest post with the intent of getting a link back to their site.

Before Panda, it was a common tactic to duplicate body content and use different titles and headings to rank better for synonym versions of keywords. For example, a page targeting "FHA loan requirements" rarely ranked highly for the term "FHA loan eligibility" even though the intent was the same. Because of this, SEOs created pages for both terms, typically keeping the body content identical and changing only the title and headings, since those carried more weight.

Panda eliminated these tactics by filtering sites that scraped content from other sites, duplicated their own content, or covered near-identical topics across many pages. Because Panda was refreshed manually rather than continuously, sites filtered by Google often waited months for the next refresh before they could recover. It wasn't until 2015 that Panda became part of the core algorithm and began running in real time.
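Google has never published how Panda detected duplicated or spun content, so treat the following as an illustration of the general problem rather than Panda itself. It is a minimal sketch of a classic, swapped-in technique for spotting near-duplicates: split each text into overlapping three-word "shingles" and compare the sets with Jaccard similarity (shared shingles divided by total distinct shingles). The function names and the 0.5 threshold are hypothetical choices.

```python
import re


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Overlapping k-word sequences after lowercasing and dropping punctuation."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Size of the intersection divided by size of the union (1.0 for two empty sets)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def near_duplicate(text_a: str, text_b: str, threshold: float = 0.5) -> bool:
    """Hypothetical cutoff: treat pages sharing at least half their shingles as near-duplicates."""
    return jaccard(shingles(text_a), shingles(text_b)) >= threshold


# Usage: an "eligibility" page spun from a "requirements" page by swapping a few words.
original = "You need a credit score of at least 580 to qualify for an FHA loan."
spun = "You need a credit score of at least 580 to be eligible for an FHA loan."
print(near_duplicate(original, spun))
```

Swapping a word only breaks the few shingles that overlap it, so lightly spun copies still share most of their shingles with the source, which is exactly the pattern the pre-Panda tactics above produced.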

Google published guidance for sites hit by Panda that it still references to this day. You can see the original guidelines here and the updated guidance Google provided after the helpful content update here.

Some of the key takeaways:

- Produce content where you're an expert or authority (or can source one)

- Provide a unique take, sources, etc.

- Review and edit every piece of content - quality control is important

- Create trust with your audience

Penguin

Remember earlier when we said Google designed algorithms to penalize sites that manipulated links? Enter "Penguin."

Penguin became the primary algorithm for fighting link spam and affected over 3% of search queries, which is a lot. Penguin first launched in April 2012, and its last confirmed update came in 2016 before it was rolled into the core algorithm. Google often took months between refreshes of this algorithm and, at one point, went well over a year before rerunning it.

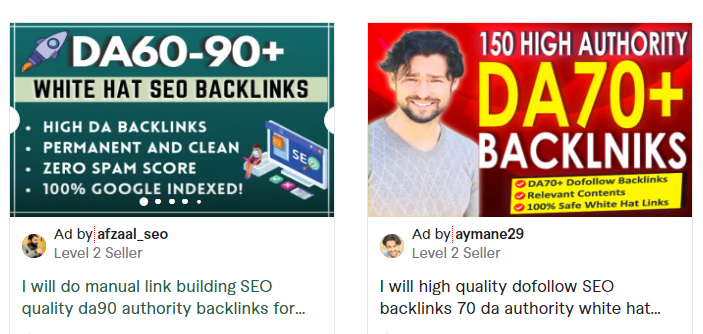

Penguin's target was link spam. Link spam and other bad link practices include:

- Buying or selling links that pass PageRank, including trading goods/services for links or providing a free product in exchange for a link.

- Excessive link exchanges ("Link to me and I'll link to you") or partner pages exclusively for the sake of cross-linking.

- Large-scale article marketing or guest posting campaigns with keyword-rich anchor text links.

- Requiring a link as part of a Terms of Service, contract, or similar arrangement without allowing a third-party content owner the choice of qualifying the outbound link, should they wish.

- Using automated programs or services to create links to your site (automated comment links).

In the Penguin era, Google took a hard stance on manipulation. Sites violating its guidelines could receive a significant rankings drop or a manual penalty. The hard part for many businesses was that, before Penguin, the targeted tactics were commonplace in SEO and marketing. Many businesses affected by Panda and Penguin ended up closing their doors or moving to an entirely new domain.

Living through both Panda and Penguin and seeing online businesses shut down is one of the reasons I'm conservative when it comes to SEO strategy. Focus on the user, build links by providing value, create unique content and try to stay ahead of where the algorithm is going instead of chasing it.

RankBrain

RankBrain introduced machine learning into the algorithm in 2015. RankBrain is a query interpretation model that helps determine if a result actually matches the query's intent.

For example, if you search something like "Super Bowl location," the traditional algorithm may have a tough time discerning whether you want the upcoming Super Bowl, the following year's or even the very first one. With RankBrain, Google uses user satisfaction to determine whether it's displaying the correct results.

RankBrain continually learns patterns in results to determine whether a result matches the search intent. One way this happens is through "pogo-sticking." Pogo-sticking is when you click on a result, then jump back to the results page and click on a different result. When pogo-sticking occurs at scale, RankBrain treats it as a signal that a result may not match the intent.
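Google has not disclosed how, or whether, RankBrain measures pogo-sticking, so this is purely a hypothetical sketch of the concept described above, not Google's method. It assumes a click log where each search session is an ordered list of `(url, dwell_seconds)` clicks for one query, counts a click as a pogo-stick when the user left within an assumed 10-second dwell limit and then clicked a different result, and reports the pogo-stick rate per URL.

```python
from collections import defaultdict

# Hypothetical log shape: one session = ordered clicks on results for a single query.
Session = list[tuple[str, float]]  # (url, dwell time in seconds)


def pogo_stick_rates(sessions: list[Session], max_dwell: float = 10.0) -> dict[str, float]:
    """Share of each URL's clicks that bounced back quickly to a different result."""
    clicks: dict[str, int] = defaultdict(int)
    pogo: dict[str, int] = defaultdict(int)
    for session in sessions:
        for i, (url, dwell) in enumerate(session):
            clicks[url] += 1
            has_next_click = i + 1 < len(session)
            # Pogo-stick: short visit, then the user clicked a *different* result.
            if has_next_click and dwell < max_dwell and session[i + 1][0] != url:
                pogo[url] += 1
    return {url: pogo[url] / clicks[url] for url in clicks}


# Usage: a.com keeps sending searchers straight back to the results page.
log = [
    [("a.com", 4.0), ("b.com", 120.0)],
    [("a.com", 3.0), ("c.com", 90.0)],
    [("b.com", 200.0)],
]
print(pogo_stick_rates(log))
```

A single quick bounce says little; the point of aggregating into a rate is that only a consistent pattern across many sessions, the "at scale" part, would suggest a mismatch with intent.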

Another use of RankBrain is essentially A/B testing search results. When Google is unsure of the search intent, it typically moves lower-ranked results to the top or cycles in different results to measure user satisfaction.

Take Some Time to Review

Google shares far less detail about algorithm updates today than it did in prior years. For example, we knew Panda targeted content and Penguin targeted links, but today Google typically rolls out core updates without context.

Google's guiding light for site owners and SEOs is laid out in this article here. It's broad and covers everything under the sun, which means an essential skill for every SEO is the ability to problem-solve, find patterns and essentially act as a detective to uncover underlying issues. The best way to do that is to understand prior algorithm updates and the problems Google was trying to solve with them.

We'll never know every update (Google launches thousands every year), but you can get a glimpse of prior updates through a handful of sites that log the known changes and major fluctuations. Again, Moz is one such company and provides that information here.